What Is a Terraform Module?

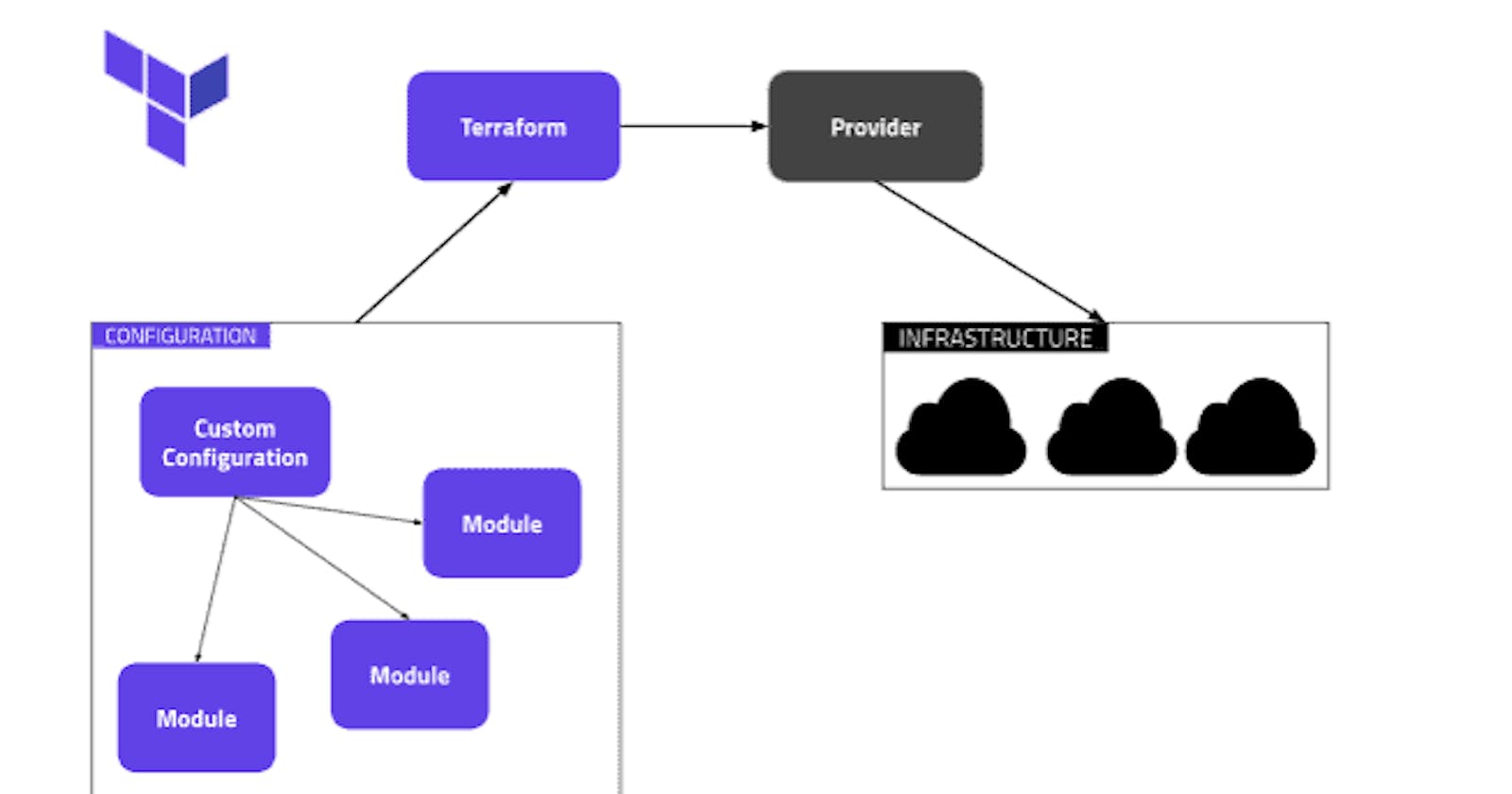

In Terraform, a module is a group of .tf files kept together in one directory, separate from the root configuration that calls it. The resources a module creates are scoped to that module, so code outside the module cannot reference them directly. If the caller needs information about those resources, the module must expose it explicitly: declare an output in the module for the required data, and reference that output from outside the module.
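As a minimal sketch of that output mechanism, the snippet below uses the resource and module names that appear later in this post. The outputs.tf file and the output names instance_ids and dev_instance_ids are assumptions made for illustration; they are not part of the original project.

```hcl
# modules/demo-app/outputs.tf (hypothetical file)
# Expose the IDs of the EC2 instances created inside the module.
output "instance_ids" {
  description = "IDs of the EC2 instances created by this module"
  value       = aws_instance.module_demo[*].id
}

# main.tf in the root directory
# Read the module's output from outside the module.
output "dev_instance_ids" {
  value = module.dev-demo-app.instance_ids
}
```

With something like this in place, terraform apply would print the instance IDs under dev_instance_ids once the dev module has been created.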

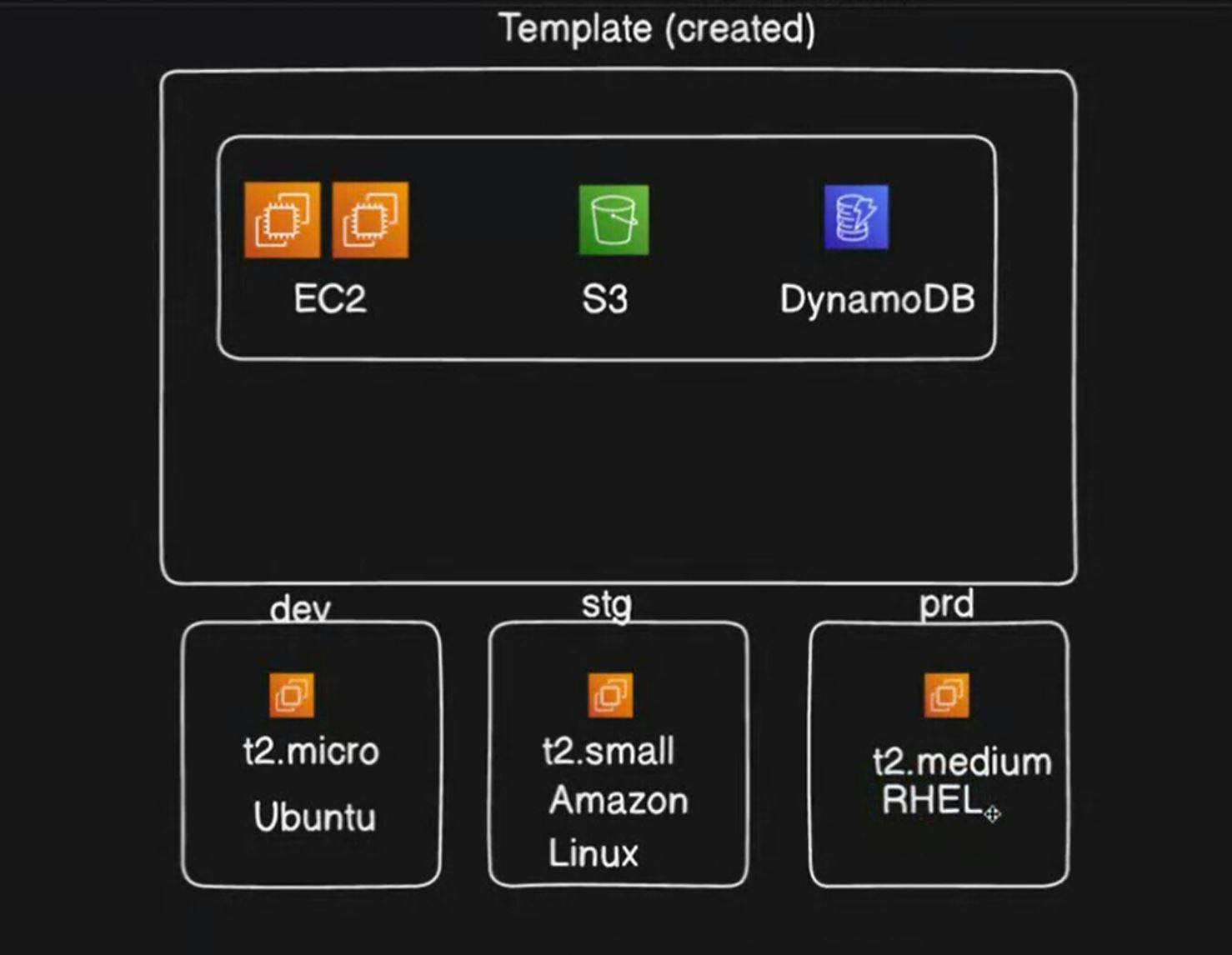

In this project, we will first create an app template as a module.

We will then call that module three times, once for each of the usual environments: dev, stage, and prod.

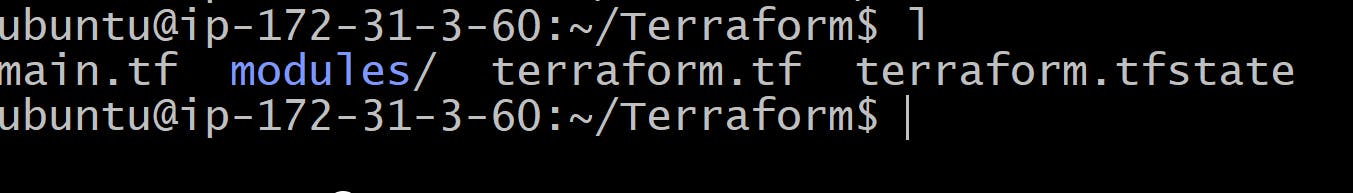

We are going to follow the folder structure below.

Our core Terraform files stay in the main project directory. Inside it we create a new directory, modules, which holds the app template.

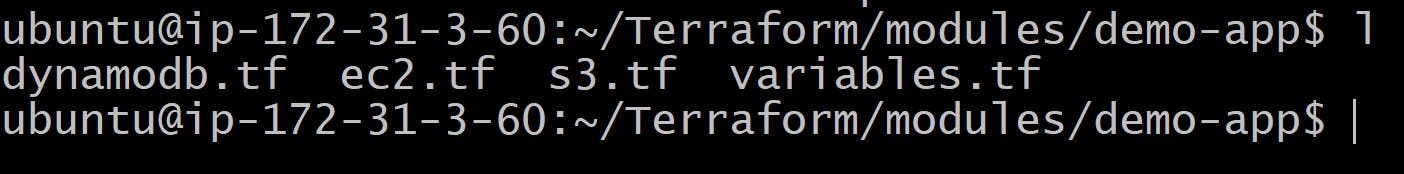

Our modules directory will look like this.

We will create three separate Terraform files for three resources: EC2 instances, an S3 bucket, and a DynamoDB table.

We will not hard-code any resource values. We use variables instead, which makes the code reusable.

ec2.tf -->

    resource "aws_instance" "module_demo" {
      count         = 2
      ami           = var.ami_id
      instance_type = var.instance_type

      tags = {
        Name = "${var.env_name}-${var.instance_name}"
      }
    }
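The instance count above is the one value still hard-coded in the module. A possible variation, shown here only as a sketch, moves it into a variable as well. The variable name instance_count and the numeric suffix on the Name tag are assumptions, not part of the original project.

```hcl
# modules/demo-app/variables.tf (hypothetical addition)
variable "instance_count" {
  type    = number
  default = 2 # same value the original ec2.tf hard-codes
}

# modules/demo-app/ec2.tf
resource "aws_instance" "module_demo" {
  count         = var.instance_count
  ami           = var.ami_id
  instance_type = var.instance_type

  tags = {
    # count.index starts at 0, so add 1 to get names ending in -1, -2, ...
    Name = "${var.env_name}-${var.instance_name}-${count.index + 1}"
  }
}
```

Because the variable has a default, the existing module calls would keep working without passing instance_count.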

s3.tf -->

    resource "aws_s3_bucket" "module_demo" {
      bucket = "${var.env_name}-${var.bucket_name}"
    }

dynamodb.tf -->

    resource "aws_dynamodb_table" "module_demo_table" {
      name         = "${var.env_name}-${var.table_name}"
      billing_mode = "PAY_PER_REQUEST"
      hash_key     = "emailID"

      attribute {
        name = "emailID"
        type = "S"
      }
    }

variables.tf -->

    variable "ami_id" {
      type = string
    }

    variable "instance_type" {
      type = string
    }

    variable "instance_name" {
      type = string
    }

    variable "bucket_name" {
      type = string
    }

    variable "table_name" {
      type = string
    }

    variable "env_name" {
      type = string
    }
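Since env_name is used as a prefix for every resource name, it can help to restrict it to the three environments used in this post. The sketch below would replace the plain env_name declaration above. The description text and the validation rule are assumptions added for illustration.

```hcl
variable "env_name" {
  type        = string
  description = "Environment prefix applied to every resource name"

  # Reject anything other than the three environments used in this project.
  validation {
    condition     = contains(["dev", "stage", "prod"], var.env_name)
    error_message = "The env_name value must be one of: dev, stage, prod."
  }
}
```

With this rule, a typo such as env_name = "prd" in a module call would be reported by terraform plan instead of producing resources with an unexpected prefix.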

Now the most important file, main.tf -->

Here we first add only the dev module and check that it works, then add the other two modules.

    # Dev-Infra
    module "dev-demo-app" {
      source        = "./modules/demo-app"
      env_name      = "dev"
      ami_id        = "ami-0eba6c58b7918d3a1"
      instance_type = "t2.micro"
      instance_name = "module-demo-instance"
      bucket_name   = "module-demo-bucket"
      table_name    = "module-demo-table"
    }

    # Stage-Infra

    # Prod-Infra

terraform.tf -->

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }
    }

    # Configure the AWS Provider
    provider "aws" {
      region = "ap-northeast-1"
    }

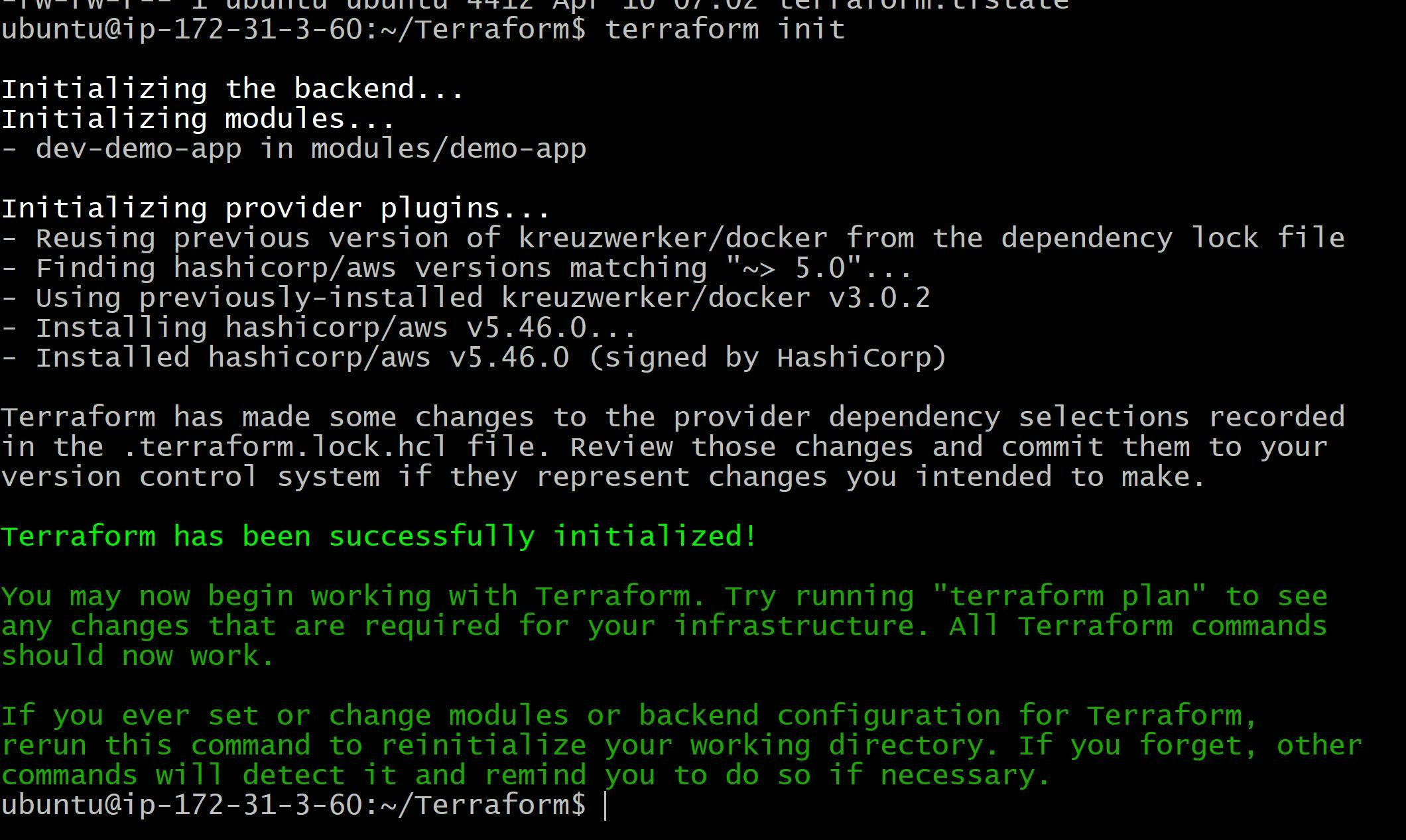

Now it's time to run terraform init.

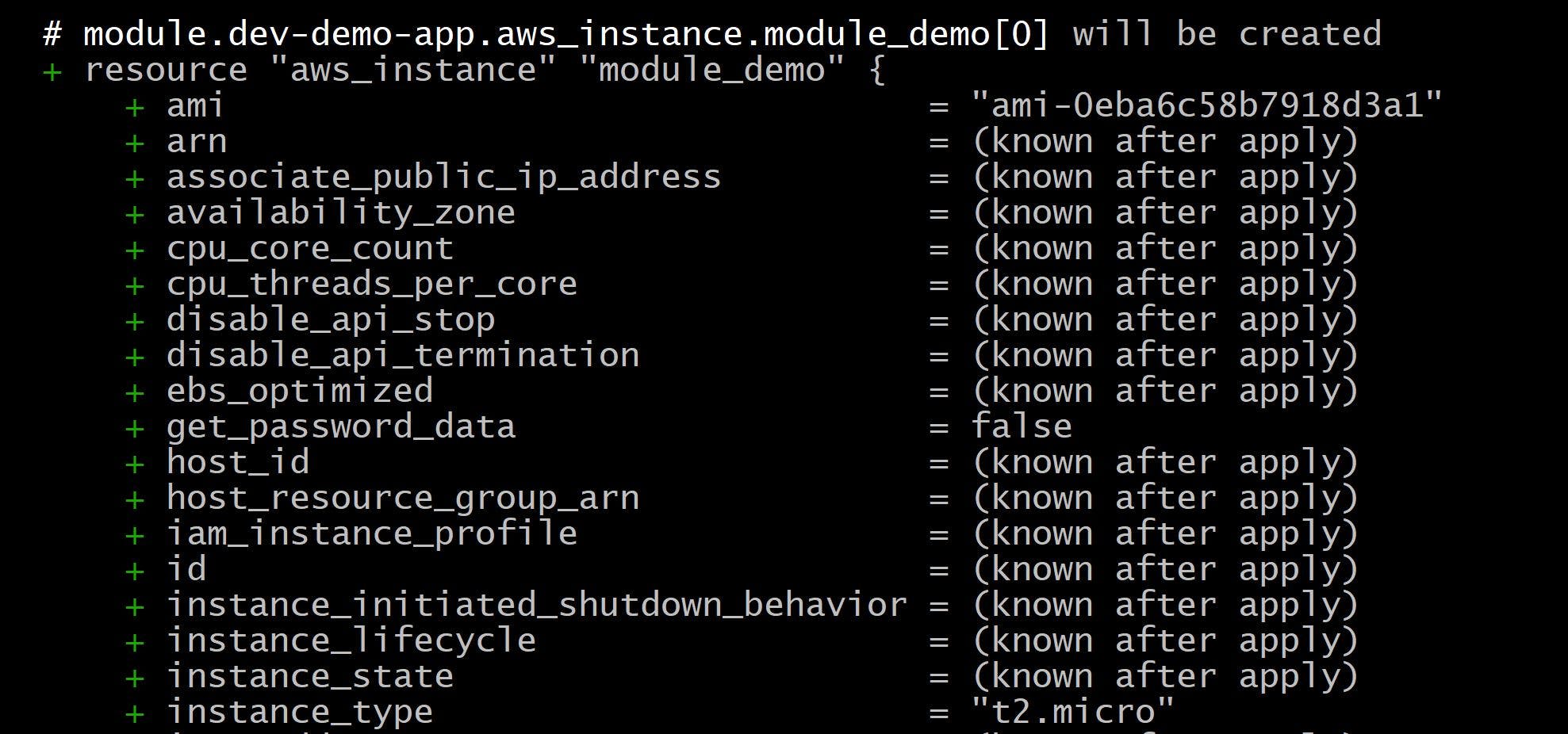

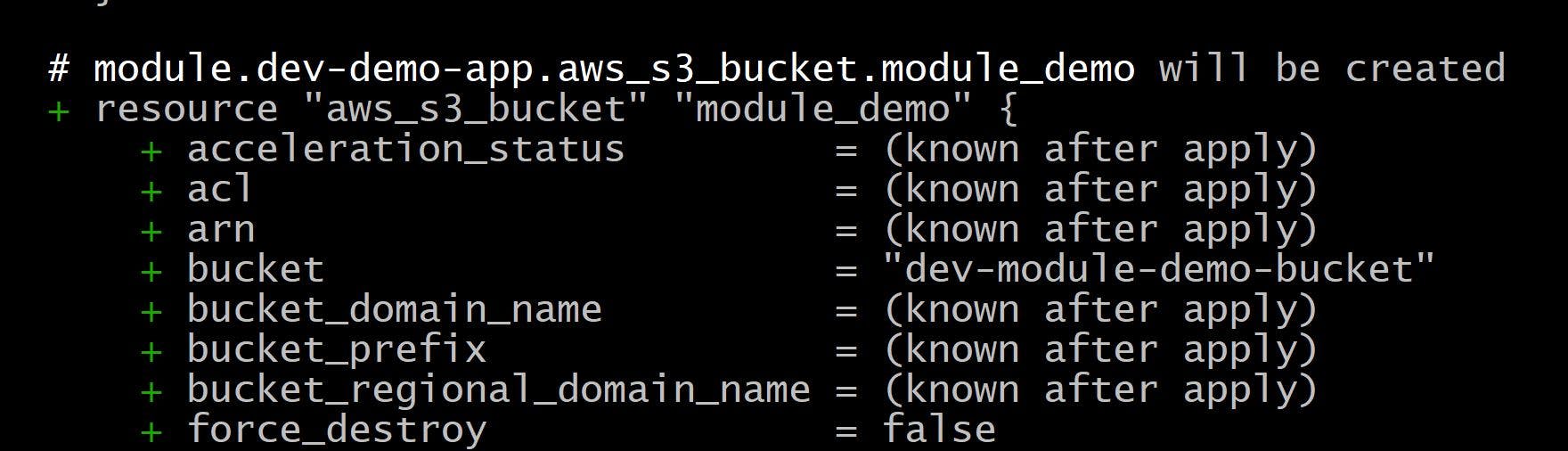

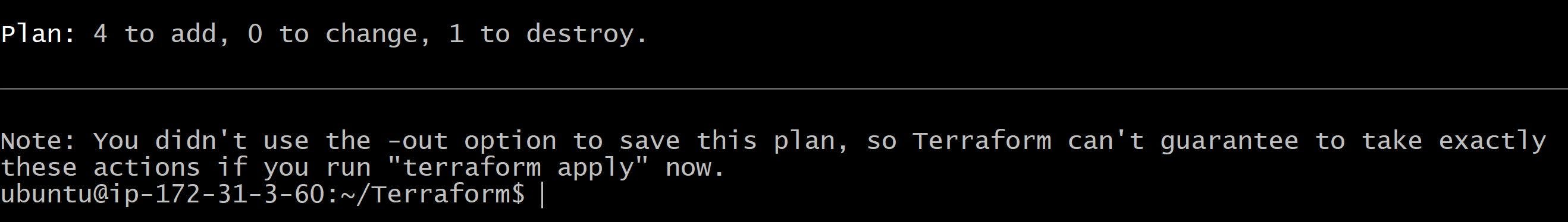

Next we run terraform plan to review what will be created before applying anything.

For the EC2 instances:

For the S3 bucket:

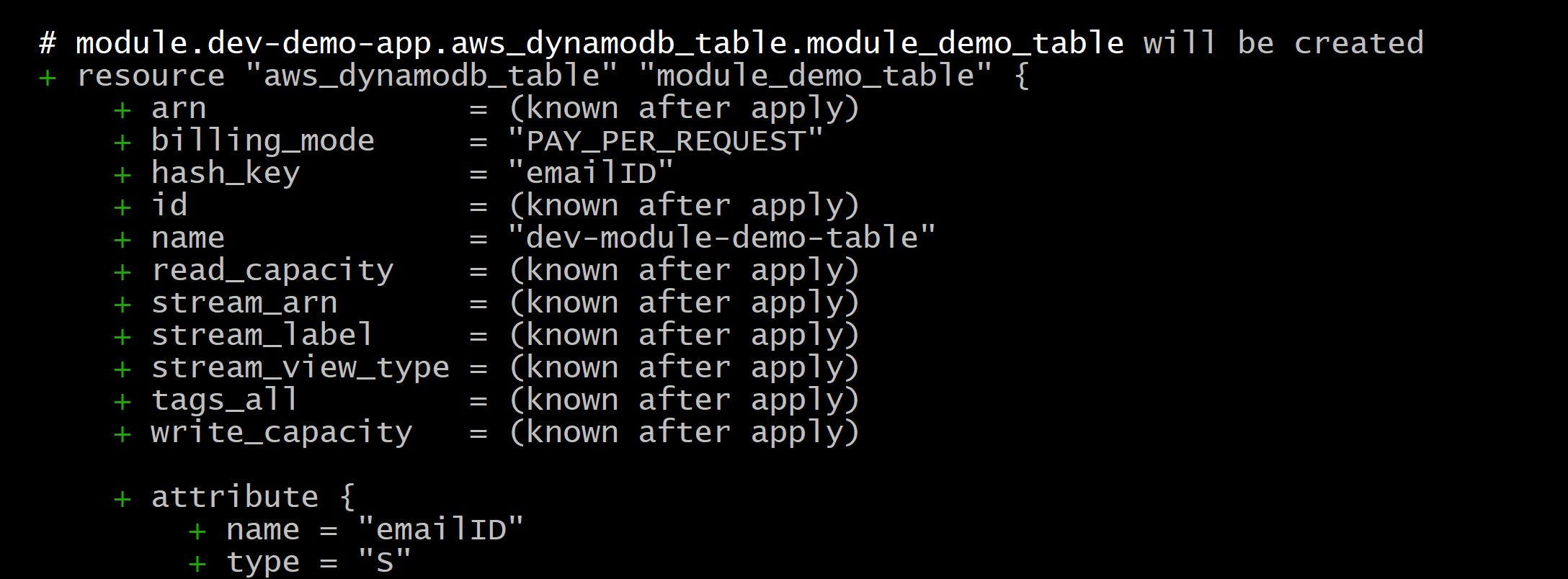

For the DynamoDB table:

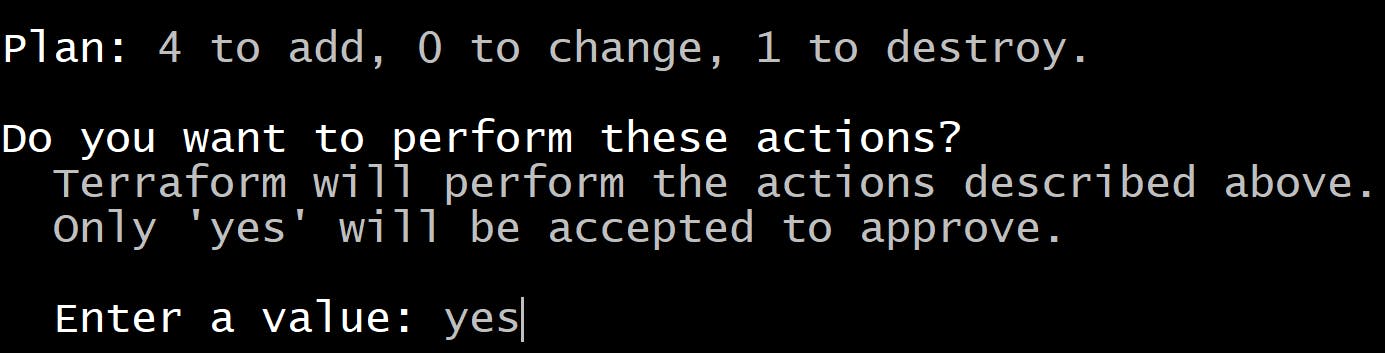

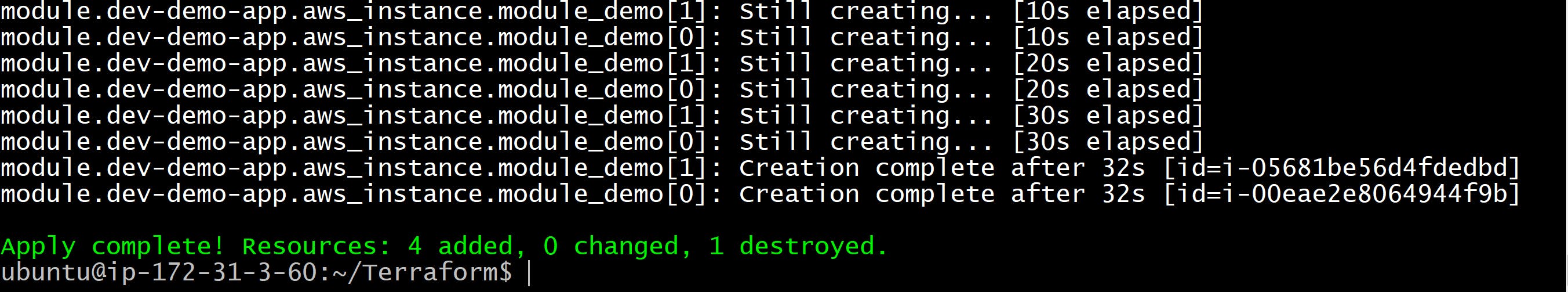

we do terraform apply now,

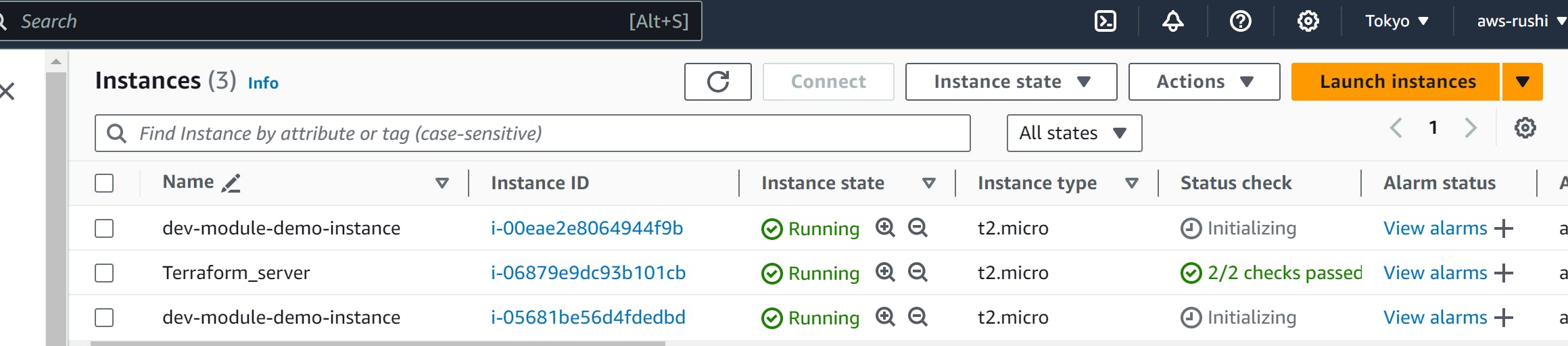

2 ec2-instances for dev-module created

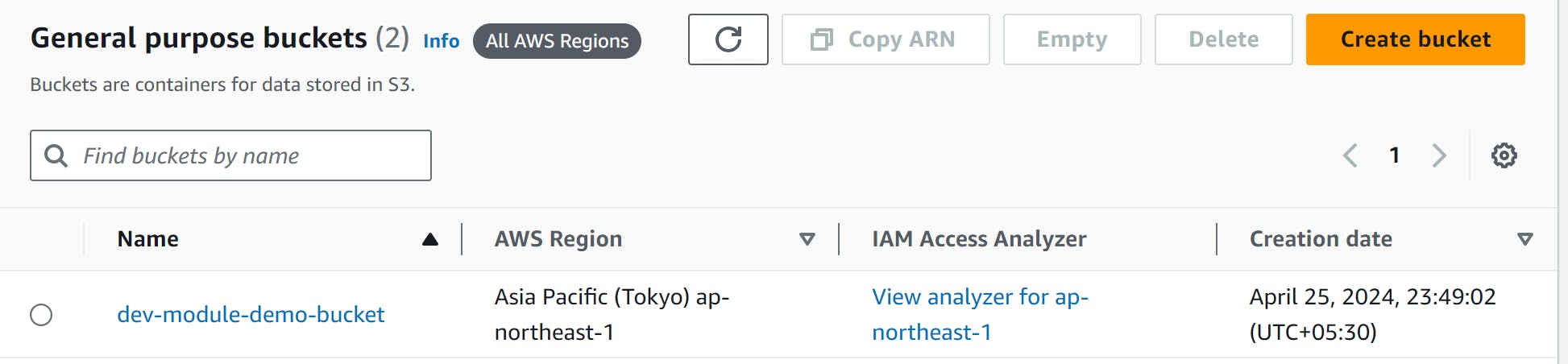

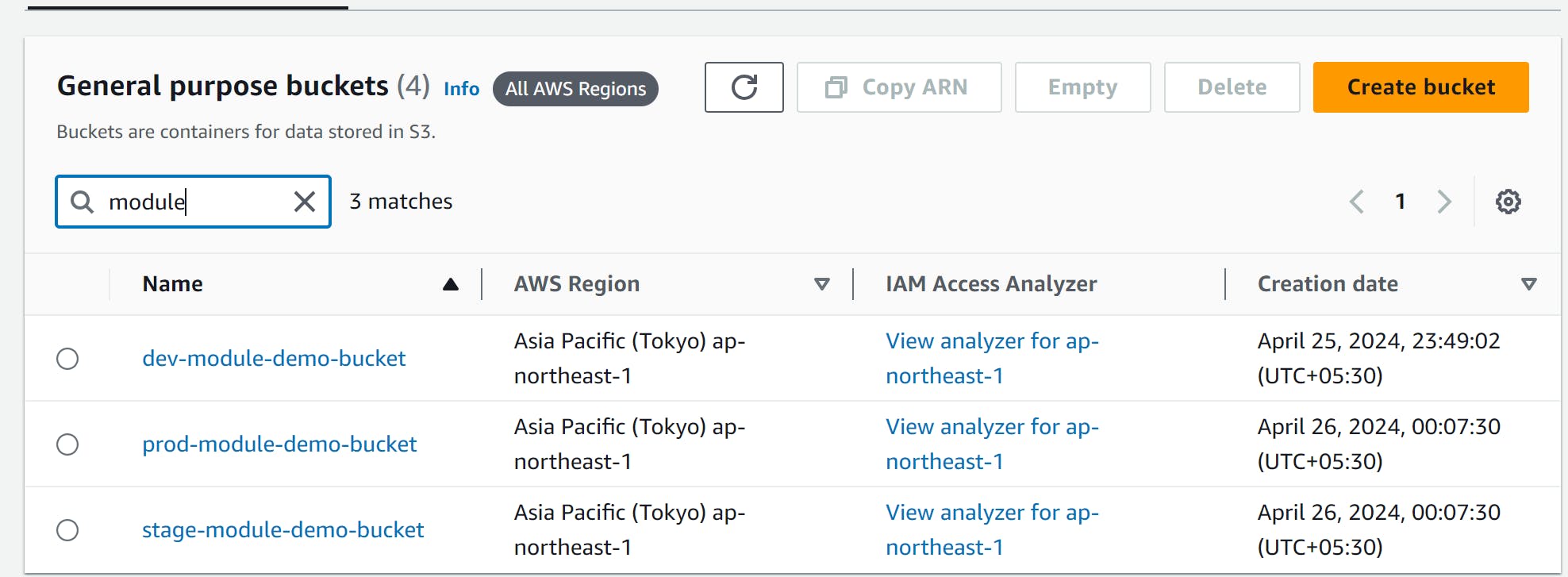

The S3 bucket was created.

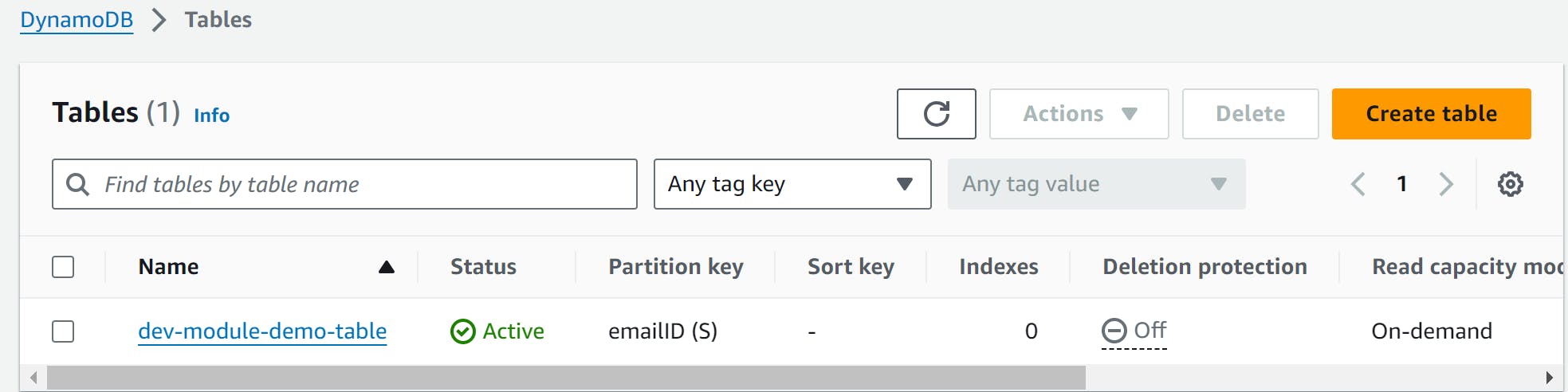

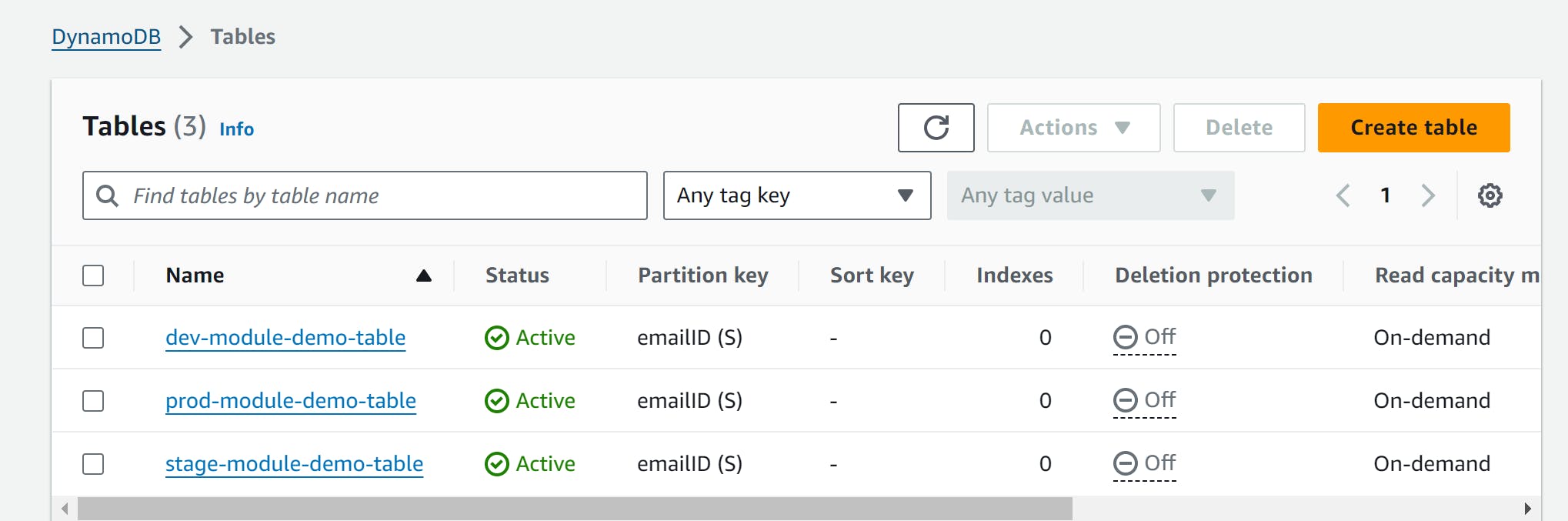

The DynamoDB table was created.

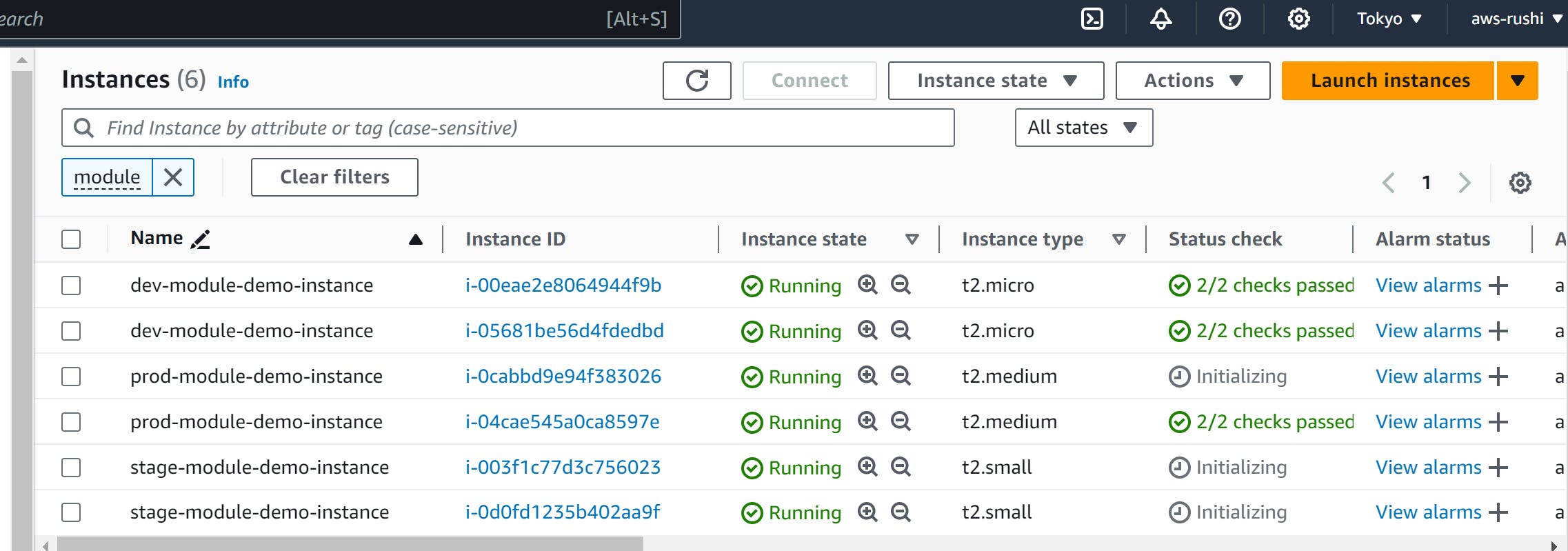

Now we can add the stage and prod modules to main.tf:

    # Dev-Infra
    module "dev-demo-app" {
      source        = "./modules/demo-app"
      env_name      = "dev"
      ami_id        = "ami-0eba6c58b7918d3a1"
      instance_type = "t2.micro"
      instance_name = "module-demo-instance"
      bucket_name   = "module-demo-bucket"
      table_name    = "module-demo-table"
    }

    # Stage-Infra
    module "stage-demo-app" {
      source        = "./modules/demo-app"
      env_name      = "stage"
      ami_id        = "ami-0c1de55b79f5aff9b"
      instance_type = "t2.small"
      instance_name = "module-demo-instance"
      bucket_name   = "module-demo-bucket"
      table_name    = "module-demo-table"
    }

    # Prod-Infra
    module "prod-demo-app" {
      source        = "./modules/demo-app"
      env_name      = "prod"
      ami_id        = "ami-0014871499315f25a"
      instance_type = "t2.medium"
      instance_name = "module-demo-instance"
      bucket_name   = "module-demo-bucket"
      table_name    = "module-demo-table"
    }
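The three module blocks differ only in env_name, ami_id, and instance_type. As an alternative to the approach used in this post, the same three environments could be written as a single module block with for_each, which Terraform supports on modules from version 0.13 onward. This is only a sketch: the local value environments and the module name demo-app are assumptions.

```hcl
# Per-environment settings; the values are copied from the three blocks above.
locals {
  environments = {
    dev   = { ami_id = "ami-0eba6c58b7918d3a1", instance_type = "t2.micro" }
    stage = { ami_id = "ami-0c1de55b79f5aff9b", instance_type = "t2.small" }
    prod  = { ami_id = "ami-0014871499315f25a", instance_type = "t2.medium" }
  }
}

# One module block, instantiated once per key in local.environments.
module "demo-app" {
  source   = "./modules/demo-app"
  for_each = local.environments

  env_name      = each.key
  ami_id        = each.value.ami_id
  instance_type = each.value.instance_type
  instance_name = "module-demo-instance"
  bucket_name   = "module-demo-bucket"
  table_name    = "module-demo-table"
}
```

The trade-off is that the module addresses change: the dev instance would become module.demo-app["dev"] rather than module.dev-demo-app, so switching an already applied project to this form would make Terraform plan to recreate the existing resources unless the state is moved first.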

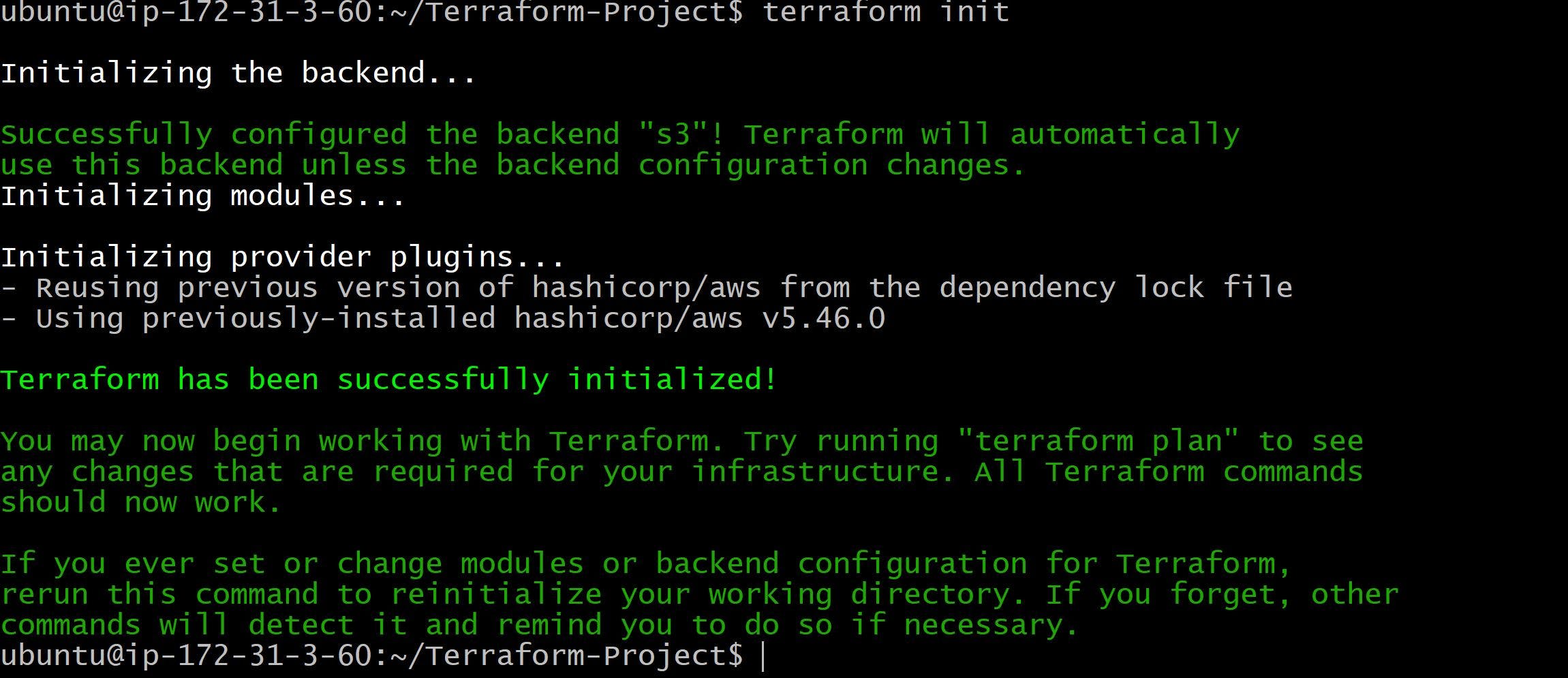

We need to run terraform init again, because we added the stage and prod modules to main.tf and Terraform has to install every new module block before it can plan or apply.

Why keep the terraform.tfstate file in a remote backend?

If we store it in a version control system, all the resource details, which can include sensitive values, are visible to anyone with access to the repository.

If we keep it locally, only one person has it. When someone else makes changes from another machine, the Terraform state becomes inconsistent.

Using Terraform, we will create an S3 bucket to store the state file and a DynamoDB table to lock the state.

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }
    }

    # Configure the AWS Provider
    provider "aws" {
      region = "ap-northeast-1"
    }

    resource "aws_s3_bucket" "backend-bucket" {
      bucket = "module-demo-backend-bucket"
    }

    resource "aws_dynamodb_table" "backend_table" {
      name         = "backend-table"
      billing_mode = "PAY_PER_REQUEST"
      hash_key     = "LockID"

      attribute {
        name = "LockID"
        type = "S"
      }
    }
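A common hardening step for a state bucket, not part of the original setup, is to turn on versioning and default encryption, so that earlier state files can be recovered and the state is encrypted at rest. The sketch below assumes the backend-bucket resource defined above. The resource names backend_versioning and backend_encryption are hypothetical.

```hcl
# Keep previous versions of terraform.tfstate so a bad write can be rolled back.
resource "aws_s3_bucket_versioning" "backend_versioning" {
  bucket = aws_s3_bucket.backend-bucket.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state objects at rest with S3-managed keys.
resource "aws_s3_bucket_server_side_encryption_configuration" "backend_encryption" {
  bucket = aws_s3_bucket.backend-bucket.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}
```

Both resource types are available in the AWS provider version range (~> 5.0) pinned above.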

Now we add a backend block inside the terraform block in our project's terraform.tf file, so that terraform.tfstate is stored remotely.

The terraform.tf file now looks like this:

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }

      # Store the state in S3 and lock it through the DynamoDB table
      backend "s3" {
        bucket         = "module-demo-backend-bucket"
        dynamodb_table = "backend-table"
        region         = "ap-northeast-1"
        key            = "terraform.tfstate"
      }
    }

    # Configure the AWS Provider
    provider "aws" {
      region = "ap-northeast-1"
    }

Run terraform init again. This time it initializes the backend.

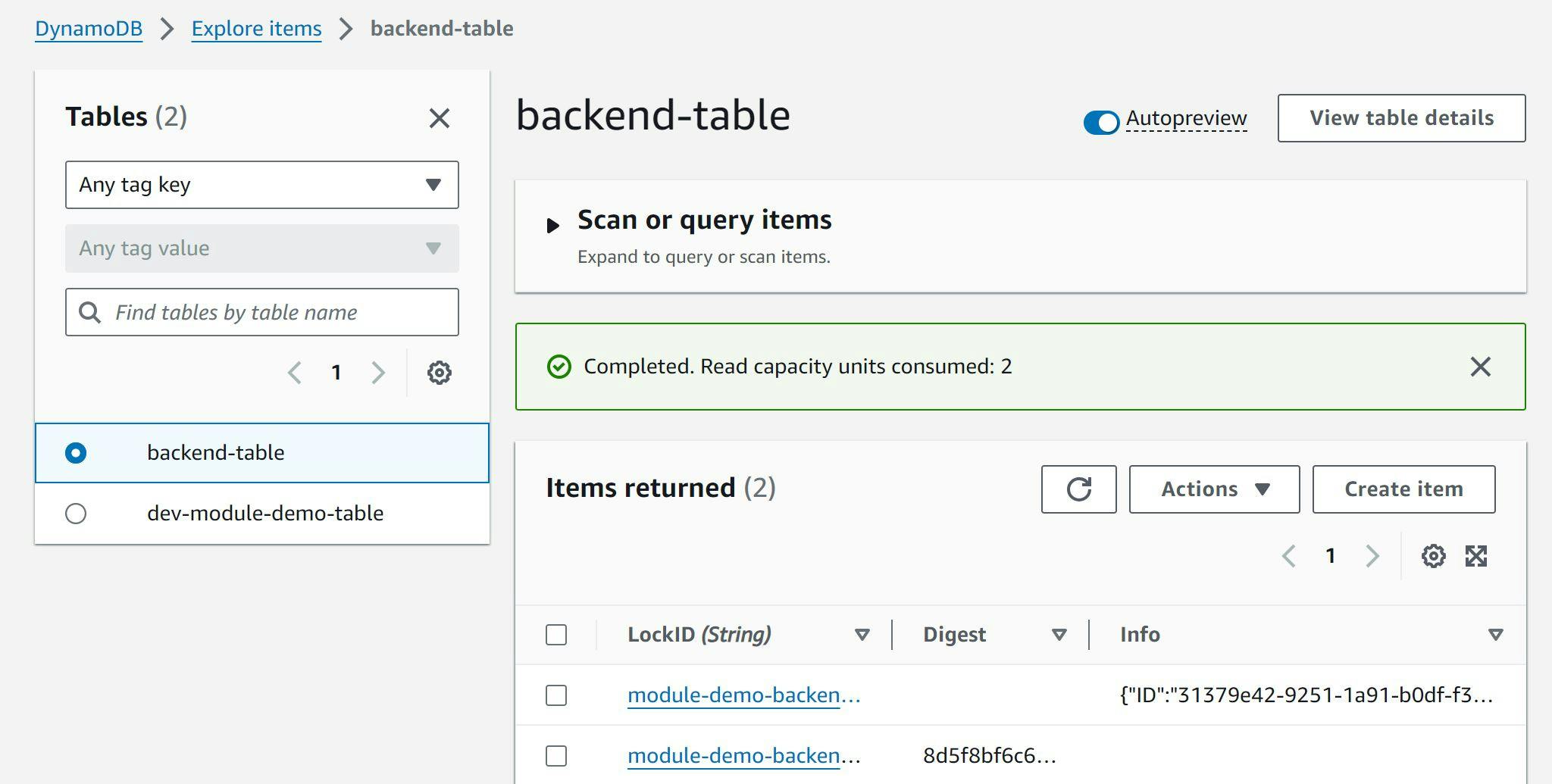

Then run terraform apply:

    terraform apply -target=module.dev-demo-app

As the screenshot below shows, as soon as terraform apply runs, an entry is created in the DynamoDB table. That entry is what provides the state locking.

Thank You!